Compute profile configuration options

Prior reading: Cloud apps overview

Purpose: This document provides detailed instructions for customizing the compute resources allocated to a cloud app through the web UI.

Note: This article does not apply to Dataproc cluster apps. To learn more about configuring a Dataproc cluster app, please see Use Dataproc (Spark) and Hail on Verily Workbench.

Introduction

The cost of running your cloud app primarily depends on the following factors:

- virtual machine type

- number and type of CPUs

- number and type of GPUs

- memory

- disk storage

These values are configurable via the Workbench UI, the Workbench CLI, and/or the Google Cloud console.

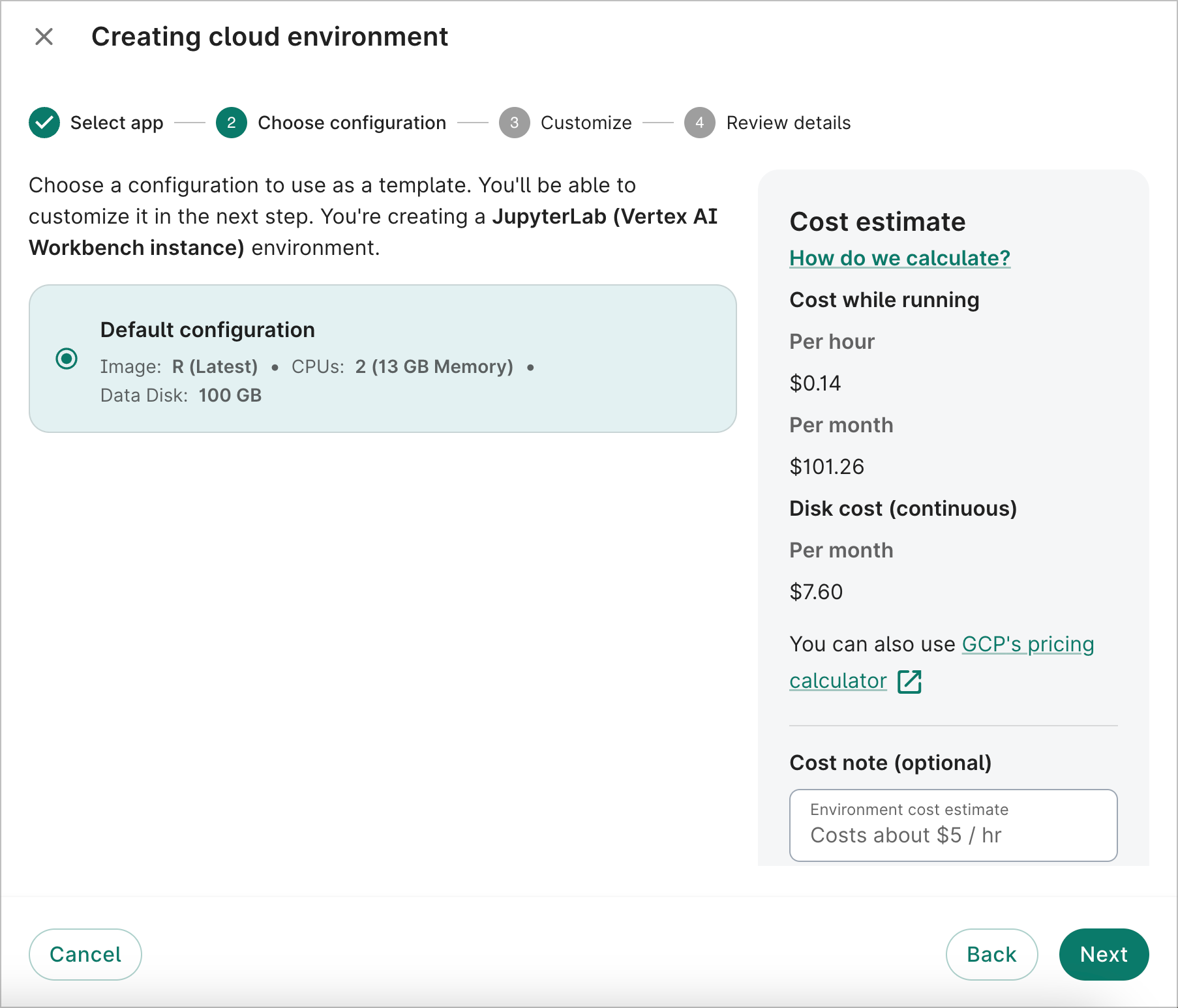

If you're creating a new app via the UI, you can view the Cost estimate on the right side of the Creating app dialog. The cost will dynamically change as you update the number of CPUs and/or GPUs.

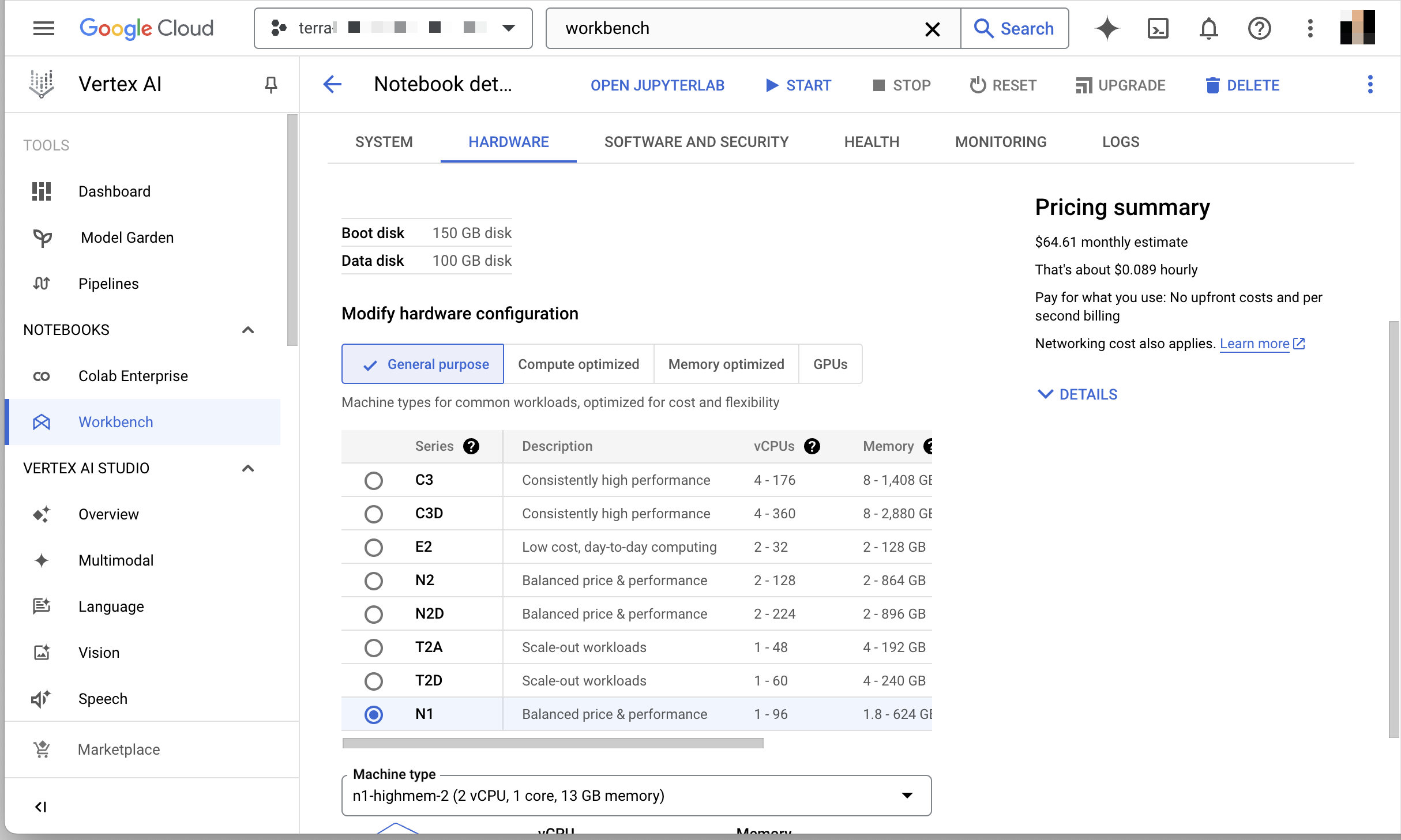
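As a rough illustration of how the factors listed above combine, the sketch below adds up per-resource charges for one hour of runtime. It is a simplified linear model, not the formula the Workbench cost estimate uses. The `HourlyRates` class, the `estimate_hourly_cost` function, and the example rates are all hypothetical; real prices depend on machine type and region and should be taken from Google Cloud pricing pages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HourlyRates:
    """Caller-supplied prices (hypothetical structure, not a Workbench API)."""
    per_vcpu: float       # price per vCPU per hour
    per_gb_memory: float  # price per GB of memory per hour
    per_gpu: float        # price per attached GPU per hour
    per_gb_disk: float    # price per GB of disk per hour


def estimate_hourly_cost(vcpus, memory_gb, gpus, disk_gb, rates):
    """Sum the per-resource charges for one hour of a running app."""
    if min(vcpus, memory_gb, gpus, disk_gb) < 0:
        raise ValueError("resource amounts must be non-negative")
    return (
        vcpus * rates.per_vcpu
        + memory_gb * rates.per_gb_memory
        + gpus * rates.per_gpu
        + disk_gb * rates.per_gb_disk
    )


# Placeholder rates for illustration only; these are NOT real prices.
example_rates = HourlyRates(
    per_vcpu=0.03, per_gb_memory=0.004, per_gpu=0.35, per_gb_disk=0.0001
)

cpu_only = estimate_hourly_cost(2, 13, 0, 500, example_rates)
with_gpu = estimate_hourly_cost(2, 13, 1, 500, example_rates)
print(f"CPU only: {cpu_only:.3f}/hour, with one GPU: {with_gpu:.3f}/hour")
```

Even with made-up rates, the comparison shows why adding a GPU or raising the CPU count changes the estimate immediately: each resource contributes its own term to the hourly total.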

Once an app has been created, you can use the Google Cloud console to make configuration changes and view pricing summaries.

Tip

To access your project in Google Cloud console, go to your workspace's Overview page and click on the Google project link in the Workspace details panel on the right-hand side of your screen.

If you're running a Vertex AI Workbench notebook instance, you can follow the steps listed here for updating your configuration.

If you're running an R Analysis Environment, Visual Studio Code, or Custom Compute Engine instance, type compute engine in the Google Cloud console Search box. Click the Compute Engine option. You should see your instance listed under VM instances. Click the instance name, and then click Edit to change its configuration.

Virtual machines

A virtual machine (VM) is an emulation of a physical computer and can perform the same functions, such as running applications and other software. VM instances are created from pools of resources in cloud data centers. You can specify your VM's geographic region, compute, and storage resources to meet your job's requirements.

You can create and destroy virtual machines at will to run applications of interest to you, such as interactive analysis environments or data processing algorithms. Virtual machines underlie Verily Workbench’s cloud apps.

By default, Workbench uses N1 high-memory machine types. To learn more about them, click here.

If you create an app via the wb resource create CLI command, you can specify a different VM type via the --machine-type option.

You can also change the VM type via the Google Cloud console after creating an app. Note that your VM options may be limited if your app uses GPUs.

Be aware

The type of VM you select will determine the number of CPUs and memory used. Therefore, it can have a major effect on your app costs.

CPUs (central processing units)

The central processing unit (CPU), or simply processor, can be considered the “brain” of a computer. Every computational machine will have at least one CPU, which is connected to every part of the computer system. It’s the operational center that receives, executes, and delegates the instructions received from programs. CPUs also handle computational calculations and logic. Increasing the number of CPUs can accelerate these tasks when the workload is able to run in parallel. Other types of processors (GPUs or TPUs) may be better suited for processing specialized tasks, such as parallel computing and machine learning.

New JupyterLab, R Analysis Environment, and Visual Studio Code apps include an N1 high-memory VM with two CPUs and 13 GB of total memory by default.

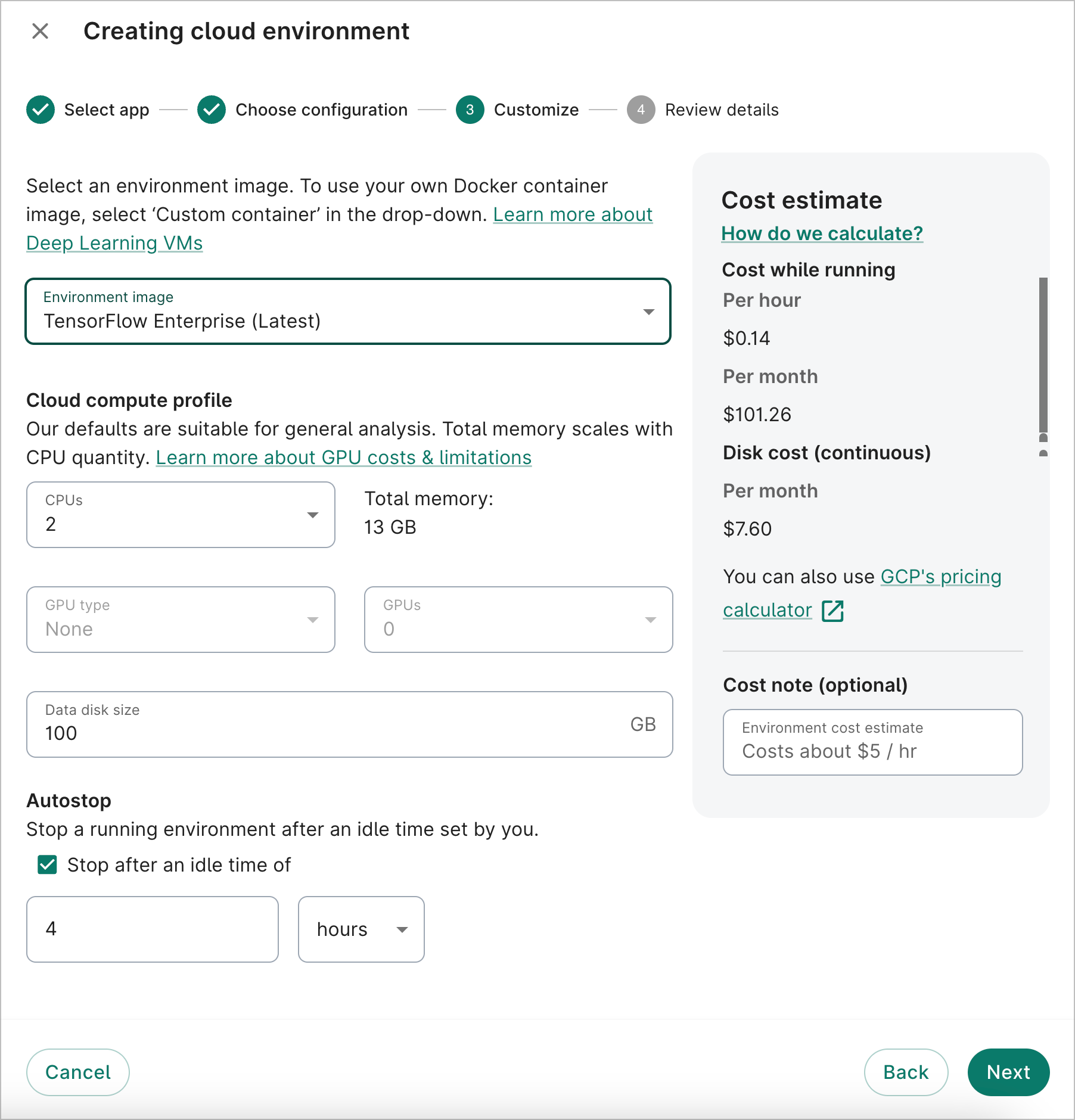

If you think you'll need more than two CPUs, you can add them via the Workbench UI at the time of creation.

If you need to add CPUs after you've created the app, you can update the compute profile via the Google Cloud console, as described in the Introduction.

Note

The memory amount will automatically scale with the number of CPUs.

GPUs (graphical processing units)

A graphical processing unit (GPU) is a specialized processor that excels at parallel computing, which means processing many tasks at once. While each core of a central processing unit (CPU) works through tasks largely one at a time, a GPU can split complex tasks into many pieces and work through them simultaneously. GPUs were traditionally used to accelerate video rendering, but their parallel computing ability also makes them ideal for tasks such as sequence alignment, AI, and machine learning.

If you're creating an app via the Workbench UI, there are several types of GPUs available for apps using PyTorch or TensorFlow Enterprise app images:

- NVIDIA Tesla P4

- NVIDIA Tesla T4

- NVIDIA Tesla V100

GPUs are not included in new apps by default. However, they can be added when you create your app via the Workbench UI or Workbench CLI. See Create a notebook app for creating an app via the Workbench CLI. You can also add GPUs via the Google Cloud console after the app's been created.

Be aware

Use of GPUs will increase the running cost of a VM per hour. This makes it particularly important to stop a GPU-enabled app when you're not using it.

You can learn more about GPUs on Google Compute Engine here, and see more details about GPU pricing here.

Memory

Memory, also known as random access memory (RAM), is where programs and data that are currently in use are temporarily stored. The central processing unit (CPU) receives instructions and data from programs, which are kept in the computer’s memory while being used. Once the instructions are completed, or the program is no longer in use, the memory is freed up. If the computer system doesn’t have enough memory for all of the CPU’s instructions, the system’s performance will degrade. While the CPU is commonly thought of as a computer’s brain, you can think of memory as the attention span.

The memory amount automatically scales when the number of CPUs is changed.
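The scaling can be sketched for the default N1 high-memory machine types. The example below assumes a fixed ratio of 6.5 GB of memory per CPU, which matches the default of two CPUs and 13 GB described earlier; the function name is hypothetical, and other machine families use different ratios.

```python
# Assumption: N1 high-memory machine types provide 6.5 GB of memory per CPU,
# consistent with the default of two CPUs and 13 GB of total memory.
N1_HIGHMEM_GB_PER_CPU = 6.5


def n1_highmem_memory_gb(cpus: int) -> float:
    """Return the total memory (GB) implied by a CPU count on N1 high-memory."""
    if cpus < 1:
        raise ValueError("cpus must be at least 1")
    return cpus * N1_HIGHMEM_GB_PER_CPU


for cpus in (2, 4, 8):
    print(f"{cpus} CPUs -> {n1_highmem_memory_gb(cpus)} GB of memory")
```

Because memory is tied to the CPU count in this way, doubling the CPUs also doubles the memory you pay for.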

Disk storage

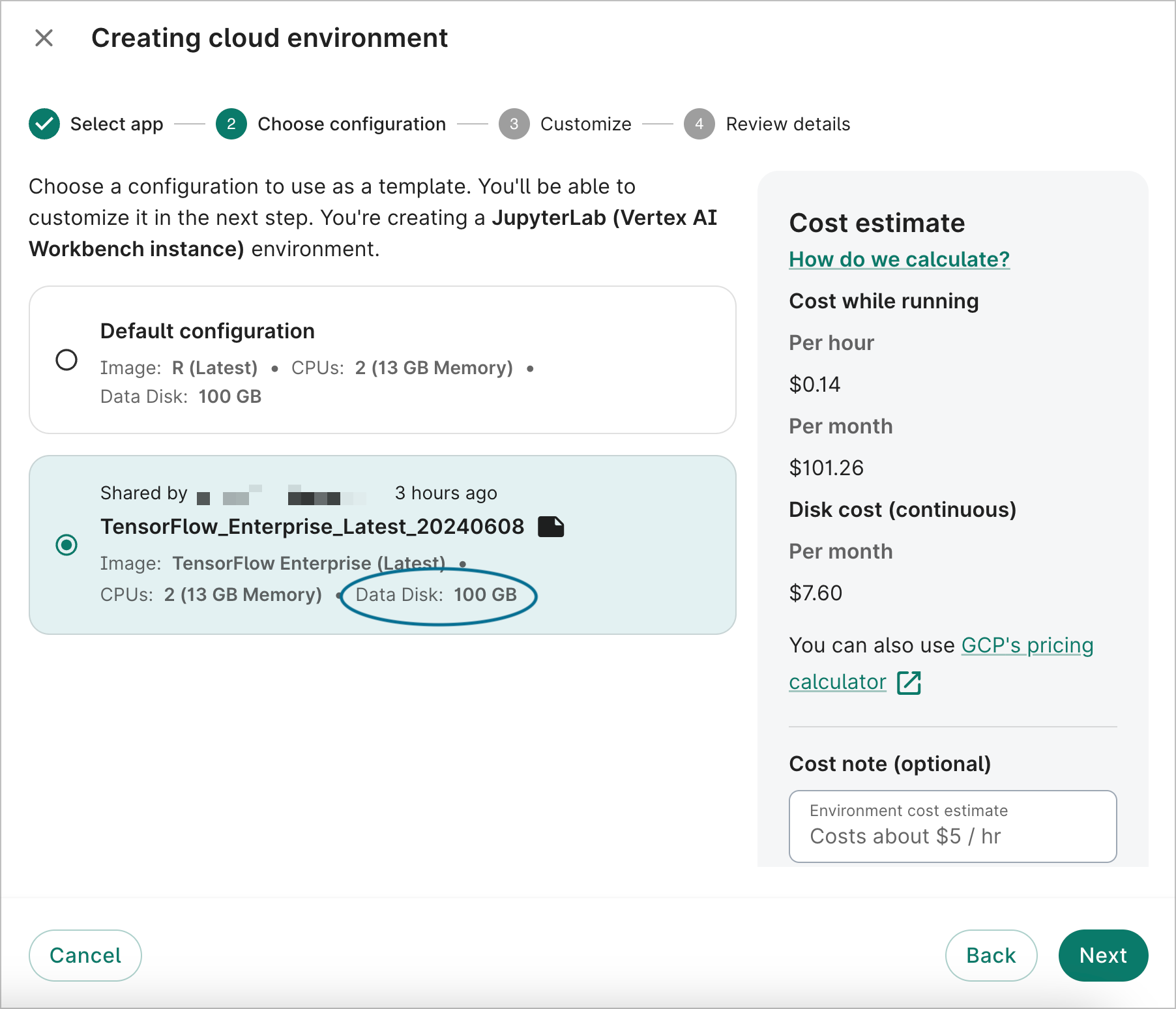

By default, your app's data disk size will be 100 GB for Vertex AI Workbench instances and 500 GB for Compute Engine instances.

You can change the size when creating a new app via the UI. The data disk can be as small as 10 GB, but the minimum recommended size is 100 GB. The maximum allowed is 64,000 GB (64 TB).
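If you script app creation, it can help to check a requested size against these limits first. The following is a minimal sketch using only the numbers stated above; `check_data_disk_size` and its return values are hypothetical and not part of any Workbench tool.

```python
MIN_DISK_GB = 10               # smallest allowed data disk
RECOMMENDED_MIN_DISK_GB = 100  # minimum recommended size
MAX_DISK_GB = 64_000           # largest allowed data disk (64 TB)


def check_data_disk_size(size_gb: int) -> str:
    """Classify a requested data disk size against the documented limits."""
    if not MIN_DISK_GB <= size_gb <= MAX_DISK_GB:
        raise ValueError(
            f"data disk must be between {MIN_DISK_GB} and {MAX_DISK_GB} GB, "
            f"got {size_gb}"
        )
    if size_gb < RECOMMENDED_MIN_DISK_GB:
        return "below recommended"
    return "ok"


print(check_data_disk_size(50))   # allowed, but under the recommended minimum
print(check_data_disk_size(500))  # within the recommended range
```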

If you select a shared configuration template in the Choose configuration step, the app will inherit the specified data disk size. However, this amount can be updated in the Compute options step.

If you're creating a new app via the CLI, you can change this value via the --data-disk-size option.

Autostop

Your app includes an autostop feature that will automatically stop running apps after a set idle time. The default idle time is 4 hours, but you can change it to any length between 1 hour and 14 days. This can be configured at the time of app creation or updated after creation via the Workbench UI or Workbench CLI. You can also choose to disable the autostop feature.
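The allowed range can be expressed as a small check. This sketch only encodes the limits described above; the `resolve_autostop` function is hypothetical, and using `None` to represent a disabled autostop is an assumption made for illustration.

```python
from datetime import timedelta
from typing import Optional

DEFAULT_IDLE = timedelta(hours=4)  # default idle time
MIN_IDLE = timedelta(hours=1)      # shortest allowed idle time
MAX_IDLE = timedelta(days=14)      # longest allowed idle time


def resolve_autostop(idle: Optional[timedelta] = DEFAULT_IDLE) -> Optional[timedelta]:
    """Validate an idle time; None stands for autostop being disabled."""
    if idle is None:
        return None
    if not MIN_IDLE <= idle <= MAX_IDLE:
        raise ValueError("idle time must be between 1 hour and 14 days")
    return idle


print(resolve_autostop())                   # falls back to the 4-hour default
print(resolve_autostop(timedelta(days=2)))  # a custom idle time within range
```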

Last Modified: 12 November 2024